Replacing a Control Card

This appendix describes how to replace the control card in a GigaVUE‑HC2. Because the GigaVUE‑HC2 has only one control card and that card is internal, the unit must be powered down for any first-time installation, removal, or replacement of the card.

Use this appendix to replace an existing control card with another control card of the same type.

To replace an existing control card with the GigaVUE‑HC2 Control Card version 2 (HC2 v2), use the appendix Upgrading to HC2 CCv2 instead.

Figure 1: Replacing a Control Card

- Log in to the serial console port on the node whose control card is to be replaced, and power the node down gracefully with the reload halt command, confirming when prompted, as follows:

    Node_A [100: standby] (config) # reload halt
    Confirm reboot/halt? [no] yes
    Halting system...
    Node_A [100: standby] (config) #
    System shutdown initiated -- logging off.
    Gigamon GigaVUE H Series Chassis
    INIT:Stopping pm: [ OK ]
    Shutting down kernel logger: [ OK ]
    Shutting down system logger: [ OK ]
    Starting killall:
    Shutting down TFTP server: [ OK ]
    [ OK ]
    Sending all processes the TERM signal...
    Sending all processes the KILL signal...
    Remounting root filesystem in read-write mode:
    Saving random seed:
    Syncing hardware clock to system time
    Running vpart script:
    Unmounting file systems:
    Remounting root filesystem in read-only mode:
    Running vpart script:
    Halting system...
    sd 0:0:0:0: [sda] Stopping disk
    Power down.

- If the GigaVUE‑HC2 is a cluster member node, open an SSH session to the VIP/leader IP address and check the cluster’s operating parameters with the show chassis and show cluster global commands, as follows. Record the Cluster ID, Cluster Name, and Cluster Management IP values; you will need them to reconfigure the node after the new control card is installed.

    Box#  Hostname  Config  Oper Status  HW Type      Product#  Serial#  HW Rev  SW Rev
    -----------------------------------------------------------------------------------
    2   * Node_B    yes     up           HC2-Chassis  132-0098  80103    A3      2.7.0
    1     -         yes     down         HC2-Chassis  132-0098  80018    -       -
    Node_B [100: leader] (config) # show cluster global

    Cluster name: 100
    Management IP: 10.150.52.232/24

    Cluster node count: 1

    Node Status:
      Node ID: 2 <--- (local node)
      Host ID: ecde217e8354
      Hostname: Node_B
      Box-id: 2
      Uptime: 0d 00h 23m 00s
      CC1/CC2 Dynamic Sync status: in sync
      Node Role: leader
      Node State: online
      Node internal address: 169.254.126.51, port: 60102
      Node external address: 10.150.52.10
      Recv. Heartbeats from: -1
      Send Heartbeats to: -1
    Node_B [100: leader] (config) #
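If you prefer to capture the values to record programmatically, the following Python sketch pulls the Cluster ID, Cluster name, and Management IP out of saved show cluster global text. It assumes the output has been captured to a string with one "Field: value" pair per line; the function name record_cluster_params is hypothetical, and a field missing from the capture (as Cluster ID is in the output above) is simply left out of the result.

```python
import re

# Values the procedure asks you to record before the control card is replaced.
FIELDS = ("Cluster ID", "Cluster name", "Management IP")


def record_cluster_params(show_cluster_global: str) -> dict[str, str]:
    """Return the recorded fields found in captured 'show cluster global' text.

    Hypothetical helper: assumes one 'Field: value' pair per line and
    omits any field that does not appear in the capture.
    """
    params = {}
    for name in FIELDS:
        # Anchor at line start and ignore case ("Cluster Name" vs "Cluster name").
        match = re.search(rf"^\s*{re.escape(name)}:\s*(\S+)",
                          show_cluster_global, re.IGNORECASE | re.MULTILINE)
        if match:
            params[name] = match.group(1)
    return params


captured = """\
Cluster ID: 100
Cluster name: 100
Management IP: 10.150.52.232/24
Cluster leader IF: eth0
Cluster node count: 2
"""

print(record_cluster_params(captured))
# {'Cluster ID': '100', 'Cluster name': '100', 'Management IP': '10.150.52.232/24'}
```

The sample text reuses field values that appear in this appendix; substitute your own capture.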

- For a stand-alone GigaVUE‑HC2, use the show running-config command to back up the entire system configuration to a text file before halting the unit. You can use this file to reconfigure the new control card.
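A minimal Python sketch of the backup step, assuming you have already captured the show running-config output as text (for example, from your terminal emulator's session log). The helper name save_config_backup and the hostname-running-config-timestamp.txt naming scheme are illustrative assumptions, not a Gigamon convention.

```python
from datetime import datetime
from pathlib import Path


def save_config_backup(running_config: str, directory: str, hostname: str) -> Path:
    """Save captured 'show running-config' text to a timestamped file.

    Hypothetical helper; the file name pattern is chosen only for this sketch.
    """
    if not running_config.strip():
        # An empty capture cannot be used to reconfigure the new control card.
        raise ValueError("running-config capture is empty")
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    path = Path(directory) / f"{hostname}-running-config-{stamp}.txt"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(running_config, encoding="utf-8")
    return path


# Usage, with captured_text holding the output copied from the console:
# backup = save_config_backup(captured_text, "hc2-backups", "Node_A")
```

Refusing an empty capture is a deliberate choice: an empty file would only be discovered after the old control card has been removed.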

- Power down the unit.

- Remove the electrical connections from the unit.

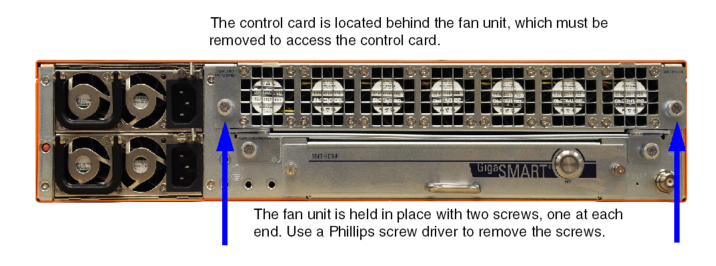

- Using a Phillips screwdriver, remove the screws that hold the fan unit to the back of the GigaVUE‑HC2.

- Remove the fan unit from the back of the chassis.

- Be sure to wear an ESD grounding strap on your wrist or ankle before touching any cards.

- Slide the control card out from the back side of the unit.

- Slide the new control card into the GigaVUE‑HC2.

- Reinstall the fan unit, reconnect the electrical connections, and power up the node.

- Log in to the node through the serial console port.

- Switch to Configure mode:

  a. Type en <Enter> to switch to Enable mode.

  b. Type config t <Enter> to switch to Configure mode.

- If the jump-start script does not start automatically, run it with the following command:

(config) # config jump-start

- Follow the jump-start script’s prompts to reconfigure the node with the existing cluster settings you recorded earlier, as follows:

    Gigamon GigaVUE H Series Chassis
    gigamon-0d04f1 login: admin
    Gigamon GigaVUE H Series Chassis
    GigaVUE‑OS configuration wizard

    Do you want to use the wizard for initial configuration? yes

    Step 1: Hostname? [gigamon-0d04f1] Node_A
    Step 2: Management interface? [eth0]
    Step 3: Use DHCP on eth0 interface? no
    Step 4: Use zeroconf on eth0 interface? [no]
    Step 5: Primary IPv4 address and masklen? [0.0.0.0/0] 10.150.52.2/24
    Step 6: Default gateway? 10.150.52.1
    Step 7: Primary DNS server? 192.168.2.20
    Step 8: Domain name? gigamon.com
    Step 9: Enable IPv6? [yes]
    Step 10: Enable IPv6 autoconfig (SLAAC) on eth0 interface? [no]
    Step 11: Enable DHCPv6 on eth0 interface? [no]
    Step 12: Enable secure cryptography? [no]
    Step 13: Enable secure passwords? [no]
    Step 14: Minimum password length? [8]
    Step 15: Admin password?
    Please enter a password. Password is a must.
    Step 15: Admin password?
    Step 15: Confirm admin password?
    Step 16: Cluster enable? [no] yes
    Step 17: Cluster interface? [eth2]
    Step 18: Cluster id (Back-end may take time to proceed)? [default-cluster] 100
    Step 19: Cluster name? [default-cluster] 100
    Step 20: Cluster Leader Preference (strongly recommend the default value)? [60]
    Step 21: Cluster mgmt IP address and masklen? [0.0.0.0/0] 10.150.52.232/24

    You have entered the following information:
    1. Hostname: Node_A
    2. Management interface: eth0
    3. Use DHCP on eth0 interface: no
    4. Use zeroconf on eth0 interface: no
    5. Primary IPv4 address and masklen: 10.150.52.2/24
    6. Default gateway: 10.150.52.1
    7. Primary DNS server: 192.168.2.20
    8. Domain name: gigamon.com
    ...

    To change an answer, enter the step number to return to.
    Otherwise hit <enter> to save changes and exit.

    Choice:

    Configuration changes saved.
    To return to the wizard from the CLI, enter "configuration jump-start" command from configure mode.
    Launching CLI...

    *** Warning: This system is a member of a cluster.
    Shared configuration must be changed on the cluster leader.

    Cluster ID: 100
    Cluster name: 100
    Management IP: 10.150.52.232/24
    Cluster leader IF: eth0
    Cluster node count: 2
    Local name: Node_A
    Local role: standby
    Local state: online
    Leader address: 10.150.52.10 (ext) 169.254.126.51 (int)
    Leader state: online
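To double-check the wizard answers against the values recorded earlier, the summary that the wizard prints before saving can be parsed and compared. This Python sketch assumes each summary entry has the form "n. Setting: value" on its own line. The cluster entries are elided (...) in the example above, so only settings visible there are compared; the helper names parse_wizard_summary and mismatches are hypothetical.

```python
import re


def parse_wizard_summary(summary: str) -> dict[str, str]:
    """Parse '<n>. <Setting>: <value>' lines from the wizard's summary."""
    entries = {}
    for line in summary.splitlines():
        # Non-greedy label stops at the first colon, so values may contain colons.
        match = re.match(r"\s*\d+\.\s+(.+?):\s*(.*)$", line)
        if match:
            entries[match.group(1)] = match.group(2).strip()
    return entries


def mismatches(summary: str, expected: dict[str, str]) -> dict[str, tuple]:
    """Map each setting that differs to (entered value, expected value)."""
    entered = parse_wizard_summary(summary)
    return {k: (entered.get(k), v) for k, v in expected.items()
            if entered.get(k) != v}


summary = """\
You have entered the following information:
1. Hostname: Node_A
5. Primary IPv4 address and masklen: 10.150.52.2/24
6. Default gateway: 10.150.52.1
"""

planned = {"Hostname": "Node_A", "Default gateway": "10.150.52.1"}
print(mismatches(summary, planned))  # {}
```

An empty result means every planned setting matched; any entry in the result is a step number worth returning to before pressing Enter.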

The serial number of the Node_A chassis is still stored in the cluster’s global configuration database. When Node_A attempts to rejoin the cluster with a serial number the cluster recognizes, the cluster automatically restores the box ID and packet distribution configuration that were associated with that serial number before the control card was replaced.
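The behavior described above can be pictured with a toy model in which the cluster keeps a table keyed by chassis serial number. This Python sketch is purely illustrative: the ClusterConfigDB class, its fields, and the box-ID allocation rule for unknown serial numbers are assumptions, not the GigaVUE‑OS implementation. The serial numbers 80018 (Node_A, box 1) and 80103 (Node_B, box 2) come from the show chassis output in this appendix.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterConfigDB:
    """Toy model of a cluster's global configuration database (hypothetical).

    box_ids maps a chassis serial number to its box ID; packet_config maps a
    box ID to its saved packet distribution settings (opaque strings here).
    """
    box_ids: dict[str, int] = field(default_factory=dict)
    packet_config: dict[int, list[str]] = field(default_factory=dict)

    def join(self, serial: str) -> tuple[int, list[str]]:
        """Return (box ID, saved configuration) for a chassis joining the cluster."""
        if serial not in self.box_ids:
            # Unknown chassis: allocate the next free box ID; nothing to restore.
            self.box_ids[serial] = max(self.box_ids.values(), default=0) + 1
        box_id = self.box_ids[serial]
        # For a known chassis this is its previous box ID and saved configuration.
        return box_id, self.packet_config.get(box_id, [])


db = ClusterConfigDB(box_ids={"80018": 1, "80103": 2},
                     packet_config={1: ["hypothetical-map-1"]})
print(db.join("80018"))  # (1, ['hypothetical-map-1'])
```

Because the serial number belongs to the chassis rather than to the control card, a re-joining Node_A takes the known-serial path and gets box ID 1 back.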

- Next, run a series of show commands to verify that the node and cluster are operating as expected:

- From the VIP/Leader IP address, run show version box-id <id> to make sure the correct images are loaded on the node. For example:

    Node_B [100: leader] (config) # show version box-id 1

    === Box-id : 1 (Role: standby) ===
    Hostname: Node_A
    === Installed images:
    Partition 1: (cur, next)
      GigaVUE-H Series 2.7.0 #103-dev 2013-02-15 05:09:08 ppc gvcc2 build_master@BuildMaster:svn17810
    Partition 2:
      GigaVUE-H Series 2.7.0 #103-dev 2013-02-15 05:09:08 ppc gvcc2 build_master@BuildMaster:svn17810

    U-Boot version: 2011.06.10
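The image check can also be scripted. This Python sketch, assuming the show version box-id output has been captured as text in the layout shown above, maps each partition to its GigaVUE-H Series release and compares the releases with the leader's SW Rev (2.7.0 for Node_B in the show chassis output). The names PARTITION_RE and installed_versions are hypothetical.

```python
import re

# Partition number, then the release that follows "GigaVUE-H Series".
PARTITION_RE = re.compile(r"Partition (\d+):.*?GigaVUE-H Series (\d+(?:\.\d+)+)",
                          re.DOTALL)


def installed_versions(show_version: str) -> dict[int, str]:
    """Map each partition number to the release installed in it."""
    return {int(num): ver for num, ver in PARTITION_RE.findall(show_version)}


captured = """\
=== Box-id : 1 (Role: standby) ===
Hostname: Node_A
=== Installed images:
Partition 1: (cur, next)
  GigaVUE-H Series 2.7.0 #103-dev 2013-02-15 05:09:08 ppc gvcc2 build_master@BuildMaster:svn17810
Partition 2:
  GigaVUE-H Series 2.7.0 #103-dev 2013-02-15 05:09:08 ppc gvcc2 build_master@BuildMaster:svn17810
"""

leader_sw_rev = "2.7.0"  # SW Rev reported for Node_B by show chassis
versions = installed_versions(captured)
print(versions)  # {1: '2.7.0', 2: '2.7.0'}
print(all(v == leader_sw_rev for v in versions.values()))  # True
```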

- From the VIP/Leader IP address, run the show cluster global and show chassis commands to confirm that the cluster is stable. For example:

    Node_B [100: leader] (config) # show cluster global
    Cluster ID: 100
    Cluster name: 100
    Management IP: 10.150.52.232/24
    Cluster leader IF: eth0
    Cluster node count: 2

    Node Status:
      Node ID: 4
      Host ID: 6c9834bec584
      Hostname: Node_A
      Box-id: 1
      Uptime: 0d 00h 02m 55s
      CC1/CC2 Dynamic Sync status: in sync
      Node Role: standby
      Node State: online
      Node internal address: 169.254.46.92, port: 51612
      Node external address: 10.150.52.2
      Recv. Heartbeats from: 2
      Send Heartbeats to: 2

    Node_B [100: leader] (config) # show chassis
    Box#  Hostname  Config  Oper Status  HW Type      Product#  Serial #  HW Rev  SW Rev
    ------------------------------------------------------------------------------------
    1     Node_A    yes     up           HC2-Chassis  132-0098  80018     2.0     2.7.0
    [100: leader] (config) #
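A sketch of the same stability check in Python: it parses captured show chassis text and lists any box whose Oper Status is not "up". It assumes whitespace-separated columns, an optional "*" marking the local box, and a dashed separator under the header, as in the outputs in this appendix; the helper names are hypothetical. Run against the earlier output, captured while Node_A was down for the card replacement, it flags box 1.

```python
COLUMNS = ("box", "hostname", "config", "oper", "hw_type",
           "product", "serial", "hw_rev", "sw_rev")


def parse_chassis(show_chassis: str) -> list[dict[str, str]]:
    """Turn the rows under the dashed separator into one dict per box."""
    rows, in_table = [], False
    for line in show_chassis.splitlines():
        if set(line.strip()) == {"-"}:
            in_table = True  # data rows follow the separator line
            continue
        tokens = line.split()
        if tokens[1:2] == ["*"]:
            del tokens[1]  # '*' marks the local box; it is not a column
        if in_table and len(tokens) == len(COLUMNS) and tokens[0].isdigit():
            rows.append(dict(zip(COLUMNS, tokens)))
    return rows


def boxes_not_up(show_chassis: str) -> list[str]:
    """Box numbers whose Oper Status is anything other than 'up'."""
    return [row["box"] for row in parse_chassis(show_chassis) if row["oper"] != "up"]


during = """\
Box# Hostname Config Oper Status HW Type Product# Serial# HW Rev SW Rev
------------------------------------------------------------------------
2 * Node_B yes up HC2-Chassis 132-0098 80103 A3 2.7.0
1 - yes down HC2-Chassis 132-0098 80018 - -
"""
after = """\
Box# Hostname Config Oper Status HW Type Product# Serial # HW Rev SW Rev
------------------------------------------------------------------------
1 Node_A yes up HC2-Chassis 132-0098 80018 2.0 2.7.0
"""

print(boxes_not_up(during))  # ['1']
print(boxes_not_up(after))   # []
```

Skipping rows until the separator avoids mistaking the multi-word header for data.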

- From the VIP/Leader IP address, run the show card command to make sure that all cards are up.

- You can also clear the port stats on the cluster stack-links and then run show port stats to verify that the packet counts are incrementing between the cluster nodes. For example:

    Node_B [100: leader] (config) # clear port stats port 2/1/x6,1/3/x1

    Counter Name        Port: 1/3/x1
    ==================  ================  ================
    IfInOctets:
    IfInUcastPkts:      0
    IfInNUcastPkts:     0
    IfInPktDrops:       0
    IfInDiscards:       0
    IfInErrors:         0
    IfInOctetsPerSec:   0
    IfInPacketsPerSec:  0

    Node_B [100: leader] (config) # show port stats port 2/1/x6,1/3/x1

    Counter Name        Port: 2/1/x6      Port: 1/3/x1
    ==================  ================  ================
    IfInOctets:         2017430592
    IfInUcastPkts:      31522353
    IfInNUcastPkts:     0
    IfInPktDrops:       0
    IfInDiscards:       0
    IfInErrors:         0
    IfInOctetsPerSec:   0
    IfInPacketsPerSec:  0
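To make the "incrementing" check explicit, the Python sketch below compares one counter between two captures of show port stats. It assumes "Name: value" pairs as in the example above and reads only the first value reported for the counter, so it suits captures of a single port; the helper names counter and is_incrementing are hypothetical.

```python
import re


def counter(stats: str, name: str) -> int:
    """First integer reported for counter `name` in 'show port stats' text."""
    # The ':' right after the name keeps IfInOctets from matching IfInOctetsPerSec.
    match = re.search(rf"\b{re.escape(name)}:\s*(\d+)", stats)
    if match is None:
        raise ValueError(f"no value found for counter {name!r}")
    return int(match.group(1))


def is_incrementing(before: str, after: str, name: str = "IfInUcastPkts") -> bool:
    """True if the counter grew between two captures taken some time apart."""
    return counter(after, name) > counter(before, name)


before = "IfInOctets: IfInUcastPkts: 0 IfInNUcastPkts: 0"
after = "IfInOctets: 2017430592 IfInUcastPkts: 31522353 IfInNUcastPkts: 0"
print(is_incrementing(before, after))  # True
```

Raising an error for a counter with no value (as IfInOctets has in the first capture) avoids silently treating a blank field as zero.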